Willis Tower, Chicago, August 2023

Introduction to Infrastructure as Code - deploying a virtual machine

In previous posts we spun up virtual machines from the consoles of cloud providers' websites. The disadvantage of that approach is that it can be time-consuming, and the steps involved are complicated and easy to forget. By contrast, specifying the cloud resources we want directly in code is more explicit and easier to reuse, and the code can be version controlled. We’ll therefore use a simple code-based approach to create some infrastructure using Amazon Web Services' (AWS) CloudFormation. CloudFormation was also used in the previous post involving Serverless to set up a series of Lambda jobs.

We have the following objectives:

- Spin up a virtual machine (An Amazon EC2 instance) of our choosing

- Give the EC2 instance access to a specified S3 bucket

- Give restricted inbound access to the instance i.e. allow SSH access only from a specified IP address

The post covers this in two parts:

1. A CloudFormation template that launches an EC2 instance

This specifies:

1.1 The inputs that the template uses

1.2 An Identity and Access Management (IAM) role to give S3 access

1.3 The type of EC2 to be launched and the rules attached to it

1.4 A security group to allow restricted SSH access

2. Deploying the template to create the instance and then deleting it

What follows assumes that an AWS key pair has been generated and that the AWS command line interface (CLI) is installed with permissions to create EC2 instances and manage bucket access.

With a bigger instance, the infrastructure created by the template can be used to spin up a temporary EC2 instance to train a machine learning model. A Python script running on the instance can use the Boto3 package to read a dataset saved in the S3 bucket and then save the model checkpoints generated during training back to the bucket. The example here is not designed for a longer-running EC2 instance, as that would involve handling access from varying IP addresses and locking down the instance internally.
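As a rough illustration of the training use case, the sketch below wraps the two S3 operations such a script would need. The function names, the object keys and the `checkpoints` prefix are hypothetical choices made for this example; the only Boto3 calls assumed are the S3 client's `download_file` and `upload_file`, and the client is passed in so the helpers do not depend on how it was created.

```python
from pathlib import Path


def fetch_dataset(s3_client, bucket, key, local_path):
    """Download one object from the bucket to a local file (uses s3:GetObject)."""
    s3_client.download_file(bucket, key, str(local_path))
    return Path(local_path)


def save_checkpoint(s3_client, bucket, local_path, prefix="checkpoints"):
    """Upload a local checkpoint file under a key prefix (uses s3:PutObject)."""
    key = f"{prefix}/{Path(local_path).name}"
    s3_client.upload_file(str(local_path), bucket, key)
    return key


# On the instance (assuming boto3 is installed there), usage might look like:
#   import boto3
#   s3 = boto3.client("s3")  # credentials are supplied by the instance's IAM role
#   fetch_dataset(s3, "test-bucket-cf-store-jd", "data/train.csv", "train.csv")
#   save_checkpoint(s3, "test-bucket-cf-store-jd", "model_epoch_1.pt")
```

Because the instance gets its permissions from the attached role, no access keys need to be stored on the machine.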

The usual cloud warning: using cloud services can be expensive, and linking cloud services together potentially raises security issues. Check what is being spun up, and what it is given access to, before deploying. Monitor cloud services and turn them off when they are no longer needed to avoid unnecessary costs.

1. A CloudFormation template that launches an EC2 instance

The following CloudFormation template, in YAML format, specifies the services we want to use.

In this example the template is named launch_ec2.yml. The treatment of IAM roles used here is based on that in Eduardo Rabelo’s post on IAM and CloudFormation.

    AWSTemplateFormatVersion: '2010-09-09'
    Parameters:
      LocalIPAddress:
        Type: String
        Description: Local IP address
      S3BucketName:
        Type: String
        Description: Name of the S3 bucket to access
    Resources:
      AppDevRole:
        Type: AWS::IAM::Role
        Properties:
          AssumeRolePolicyDocument:
            Statement:
              - Effect: Allow
                Principal:
                  Service:
                    - ec2.amazonaws.com
                Action:
                  - sts:AssumeRole
          Policies:
            - PolicyName: AppDevRolePolicy
              PolicyDocument:
                Statement:
                  - Effect: Allow
                    Action:
                      - s3:ListBucket
                    Resource:
                      - !Sub arn:aws:s3:::${S3BucketName}
                  - Effect: Allow
                    Action:
                      - s3:PutObject
                      - s3:GetObject
                    Resource:
                      - !Sub arn:aws:s3:::${S3BucketName}/*
      AppDevEC2InstanceProfile:
        Type: AWS::IAM::InstanceProfile
        Properties:
          Roles:
            - !Ref AppDevRole
      EC2Instance:
        Type: AWS::EC2::Instance
        Properties:
          InstanceType: t2.micro # Replace with the instance type wanted
          ImageId: ami-0b9932f4918a00c4f # Replace with the Amazon Machine Image (AMI) ID wanted
          KeyName: cloud_key
          SecurityGroups:
            - !Ref SSHSecurityGroup
          IamInstanceProfile: !Ref AppDevEC2InstanceProfile
          AvailabilityZone: eu-west-2a
      SSHSecurityGroup:
        Type: AWS::EC2::SecurityGroup
        Properties:
          GroupDescription: Enable SSH access only from my IP address
          SecurityGroupIngress:
            - IpProtocol: tcp
              FromPort: 22
              ToPort: 22
              CidrIp: !Ref LocalIPAddress

The template has the following parts:

1.1 The inputs that the template uses

    Parameters:
      LocalIPAddress:
        Type: String
        Description: Local IP address
      S3BucketName:
        Type: String
        Description: Name of the S3 bucket to access

This sets up two parameters that we will pass to AWS when creating the stack: LocalIPAddress, the IP address that we want the instance to be accessible from, and S3BucketName, the S3 bucket that we want the EC2 instance to be able to access. The bucket is assumed to already exist.

When the stack is created we pass these values to it using the following command line argument format, shown here for the IP address we want to give access to:

    --parameters ParameterKey=LocalIPAddress,ParameterValue='IP address'
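The argument format above can also be generated programmatically. The following is a minimal sketch, assuming the parameter values are held in a dictionary; `format_parameters` is a hypothetical helper name and 203.0.113.5 is a placeholder address from the range reserved for documentation.

```python
def format_parameters(values):
    """Turn {"Key": "value"} pairs into ParameterKey=...,ParameterValue=... strings."""
    return [f"ParameterKey={key},ParameterValue={value}" for key, value in values.items()]


# Placeholder values for illustration only.
parameters = format_parameters(
    {
        "LocalIPAddress": "203.0.113.5/32",
        "S3BucketName": "test-bucket-cf-store-jd",
    }
)
print(" ".join(["--parameters", *parameters]))
```

Each key in the dictionary has to match a name declared in the Parameters section of the template.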

1.2 An Identity and Access Management (IAM) role to give S3 access

      AppDevRole:
        Type: AWS::IAM::Role
        Properties:
          AssumeRolePolicyDocument:
            Statement:
              - Effect: Allow
                Principal:
                  Service:
                    - ec2.amazonaws.com
                Action:
                  - sts:AssumeRole
          Policies:
            - PolicyName: AppDevRolePolicy
              PolicyDocument:
                Statement:
                  - Effect: Allow
                    Action:
                      - s3:ListBucket
                    Resource:
                      - !Sub arn:aws:s3:::${S3BucketName}
                  - Effect: Allow
                    Action:
                      - s3:PutObject
                      - s3:GetObject
                    Resource:
                      - !Sub arn:aws:s3:::${S3BucketName}/*
      AppDevEC2InstanceProfile:
        Type: AWS::IAM::InstanceProfile
        Properties:
          Roles:
            - !Ref AppDevRole

This sets up:

- An IAM role AppDevRole, with an AssumeRolePolicyDocument that gives an Amazon EC2 instance the right to assume the role and so perform the actions specified in the subsequent IAM policy. An IAM role, as distinct from an IAM user, applies to the permissions of AWS resources (here EC2 instances) rather than the permissions of a person.

- An IAM policy AppDevRolePolicy, which specifies the permissions the role has. In this case it allows the EC2 instance to list the files (ListBucket) in the bucket identified by S3BucketName and to put (PutObject) and get (GetObject) objects in the bucket. The put and get actions operate on the objects in the bucket rather than on the bucket itself. This is why the resource for these actions ends with the /* wildcard, which matches the objects in the bucket, whereas the resource for the list action is just the bucket itself.

- An IAM instance profile AppDevEC2InstanceProfile, which attaches the role to the EC2 instance when it launches.

!Sub is used to identify the resources, as distinct from !Ref, as we are substituting the bucket name into a string to identify a resource rather than just referencing it outright.
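To make the difference between the two resource strings concrete, here is a small sketch that performs the same substitution as !Sub in plain Python and prints the resulting policy document. `build_s3_policy` is a hypothetical helper written for this illustration; CloudFormation does the substitution itself when the stack is created.

```python
import json


def build_s3_policy(bucket_name):
    """Mirror the template's policy: list on the bucket, put/get on its objects."""
    bucket_arn = f"arn:aws:s3:::{bucket_name}"  # what !Sub produces for the bucket
    objects_arn = f"{bucket_arn}/*"  # every object key inside the bucket
    return {
        "Statement": [
            {"Effect": "Allow", "Action": ["s3:ListBucket"], "Resource": [bucket_arn]},
            {
                "Effect": "Allow",
                "Action": ["s3:PutObject", "s3:GetObject"],
                "Resource": [objects_arn],
            },
        ]
    }


print(json.dumps(build_s3_policy("test-bucket-cf-store-jd"), indent=2))
```

The list statement targets the bucket ARN, while the put and get statements target the object ARNs beneath it.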

1.3 The type of EC2 to be launched and the rules attached to it

      EC2Instance:
        Type: AWS::EC2::Instance
        Properties:
          InstanceType: t2.micro # Replace with the instance type wanted
          ImageId: ami-0b9932f4918a00c4f # Replace with the Amazon Machine Image (AMI) ID wanted
          KeyName: cloud_key
          SecurityGroups:
            - !Ref SSHSecurityGroup
          IamInstanceProfile: !Ref AppDevEC2InstanceProfile
          AvailabilityZone: eu-west-2a

This part of the YAML creates an EC2 instance in the eu-west-2a Availability Zone (in the London region). Here the instance type is set to the small t2.micro, with a standard Ubuntu image specified in ImageId.

The AWS key pair that is needed to SSH into the instance is specified in the KeyName property (the key pair in this example is called cloud_key, and the corresponding key file used to SSH is cloud_key.pem).

The template attaches a security group, SSHSecurityGroup, which manages how we can access the instance (this is defined in the next part of the YAML and only allows SSH access from the IP address that we pass to the template when we launch it). The IAM instance profile created previously is attached to the EC2 instance to give access to the bucket.

1.4 A security group to allow restricted SSH access

      SSHSecurityGroup:
        Type: AWS::EC2::SecurityGroup
        Properties:
          GroupDescription: Enable SSH access only from my IP address
          SecurityGroupIngress:
            - IpProtocol: tcp
              FromPort: 22
              ToPort: 22
              CidrIp: !Ref LocalIPAddress

By default, a newly created security group allows no inbound traffic, so every inbound port on the instance is locked down.

Here we allow one way into the EC2 instance by adding a SecurityGroupIngress rule on the SSH port 22 (the corresponding property for outbound rules is SecurityGroupEgress).

FromPort and ToPort specify the range of ports that can be accessed, here from port 22 to port 22, i.e. that port only.

CidrIp specifies, in Classless Inter-Domain Routing (CIDR) notation, the range of IP addresses allowed to access this port. The range is set by the LocalIPAddress parameter that we pass to the CloudFormation template when it is deployed; a /32 suffix restricts it to a single address.
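The effect of a single-address CIDR block can be checked locally with Python's standard `ipaddress` module. This is only an illustrative sketch: `single_host_cidr` and `is_allowed` are hypothetical helper names, and the addresses are placeholders from a range reserved for documentation.

```python
import ipaddress


def single_host_cidr(ip):
    """Return the /32 CIDR block that contains only the given IPv4 address."""
    address = ipaddress.ip_address(ip)  # raises ValueError on malformed input
    if address.version != 4:
        raise ValueError("expected an IPv4 address")
    return f"{address}/32"


def is_allowed(source_ip, cidr):
    """Check whether a source address falls inside the permitted CIDR block."""
    return ipaddress.ip_address(source_ip) in ipaddress.ip_network(cidr)


cidr = single_host_cidr("203.0.113.5")
print(cidr, is_allowed("203.0.113.5", cidr), is_allowed("203.0.113.6", cidr))
# prints: 203.0.113.5/32 True False
```

A wider block such as /24 would admit 256 addresses, which is why the single-address form is used when only one machine should be able to connect.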

2. Deploying the template to create the instance and then deleting it

Launching an EC2 instance restricted to the IP address that the template is deployed from (with access to an S3 bucket test-bucket-cf-store-jd via an IAM role) involves an AWS CLI command of the form:

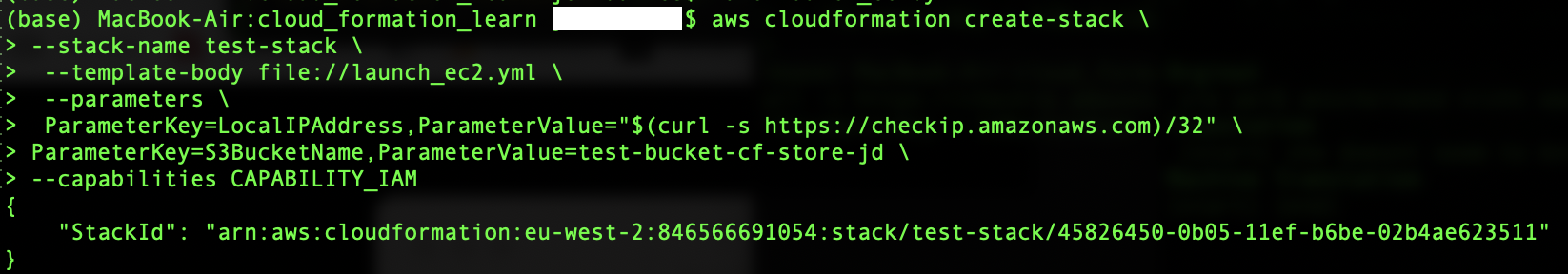

    $ aws cloudformation create-stack \
        --stack-name test-stack \
        --template-body file://launch_ec2.yml \
        --parameters \
          ParameterKey=LocalIPAddress,ParameterValue="$(curl -s https://checkip.amazonaws.com)/32" \
          ParameterKey=S3BucketName,ParameterValue=test-bucket-cf-store-jd \
        --capabilities CAPABILITY_IAM

In the example the stack is given the name test-stack. The YAML shown above is saved as launch_ec2.yml in a folder called cloud_formation_learn and is referenced by --template-body. Here we use curl against the AWS site https://checkip.amazonaws.com to automatically detect the IP address we are running the command from, append /32 to restrict the range to that single address, and pass the result as the ParameterValue for LocalIPAddress. The name of the S3 bucket that we are granting access to is passed as the parameter value corresponding to S3BucketName. Because the template creates IAM resources, we also have to pass CAPABILITY_IAM with the --capabilities flag.
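For anyone who prefers to drive the deployment from a script, the sketch below assembles the same create-stack command as an argument list. It is an illustration under assumptions rather than part of the original workflow: the function names are hypothetical, the IP lookup uses only the standard library, and nothing is executed until the list is handed to `subprocess.run`.

```python
import ipaddress
import urllib.request


def detect_public_ip(url="https://checkip.amazonaws.com", timeout=10):
    """Fetch the caller's public IP address, as the curl call in the command does."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        text = response.read().decode("ascii").strip()
    return str(ipaddress.ip_address(text))  # validates the response


def build_create_stack_command(stack_name, template_file, local_ip, bucket_name):
    """Assemble the AWS CLI arguments without running anything."""
    ipaddress.ip_address(local_ip)  # fail early on a malformed address
    return [
        "aws", "cloudformation", "create-stack",
        "--stack-name", stack_name,
        "--template-body", f"file://{template_file}",
        "--parameters",
        f"ParameterKey=LocalIPAddress,ParameterValue={local_ip}/32",
        f"ParameterKey=S3BucketName,ParameterValue={bucket_name}",
        "--capabilities", "CAPABILITY_IAM",
    ]


# Usage, run from the folder containing the template:
#   import subprocess
#   command = build_create_stack_command(
#       "test-stack", "launch_ec2.yml", detect_public_ip(), "test-bucket-cf-store-jd"
#   )
#   subprocess.run(command, check=True)
```

Passing the arguments as a list avoids shell quoting issues around the parameter values.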

The screenshot below shows an example of what is returned when we run this (some computer-specific information has been removed):

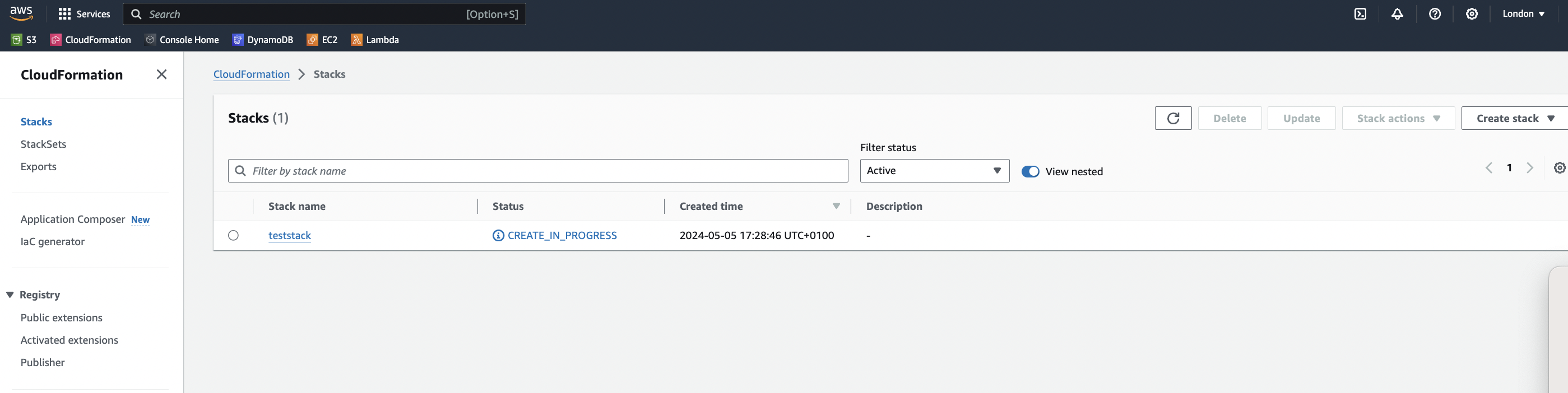

The CloudFormation stack launches (shown here from the CloudFormation page of the AWS console).


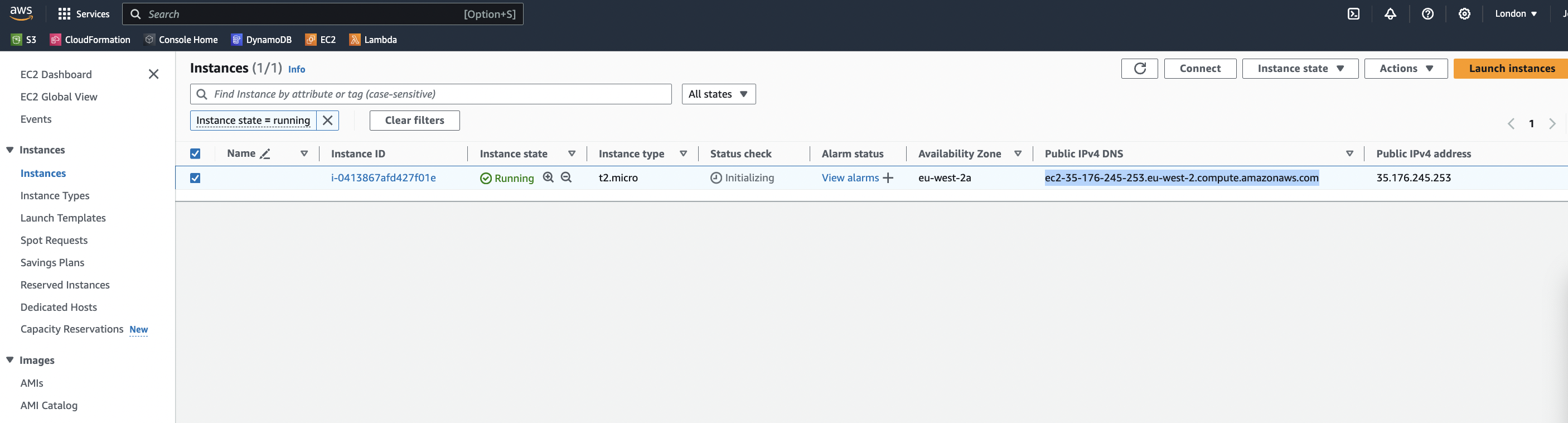

The corresponding EC2 instance is created and starts running (shown from the EC2 page).


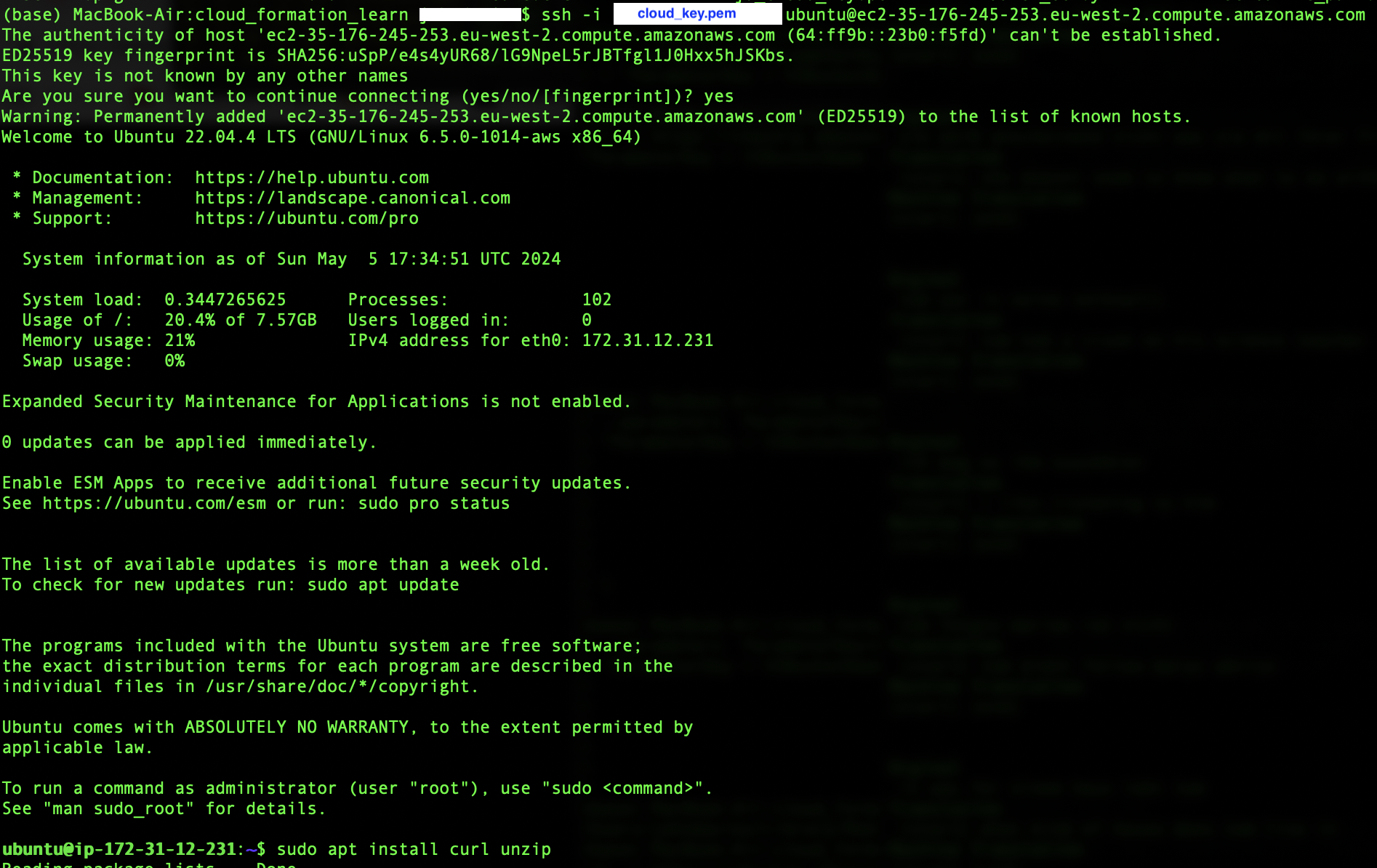

We get its IP address and log in to it with the key identified when we set up the stack, putting the username ubuntu in front of the address (ubuntu@ followed by the IP address), as Ubuntu is the operating system selected.


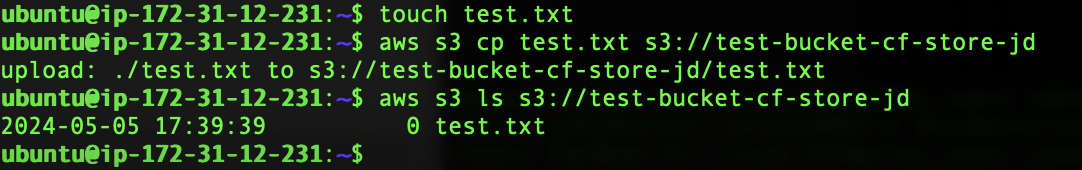
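The login step can be summarised as a small sketch that builds the SSH command from the key file and the instance address. `build_ssh_command` is a hypothetical helper, and 198.51.100.7 is a placeholder from a range reserved for documentation, standing in for the public IP address shown in the EC2 console.

```python
def build_ssh_command(key_file, host, user="ubuntu"):
    """Build the ssh argument list; the user name goes in front of the address."""
    return ["ssh", "-i", key_file, f"{user}@{host}"]


print(" ".join(build_ssh_command("cloud_key.pem", "198.51.100.7")))
# prints: ssh -i cloud_key.pem ubuntu@198.51.100.7
```

The default user name depends on the machine image, so a different image may need a different value for `user`.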

After installing the AWS CLI on the instance, to check that the bucket rules work, we create a file test.txt on the EC2, copy it to the bucket and list the bucket’s contents from the instance.

The following command provides details on the stack that has been created (or on all stacks if the --stack-name part is omitted):

    $ aws cloudformation describe-stacks --stack-name test-stack
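The command returns JSON, so the status of each stack can be pulled out with a few lines of Python. The sketch below assumes the usual response shape, a top-level `Stacks` list whose entries carry `StackName` and `StackStatus`; the sample string is a heavily trimmed, made-up response used only to exercise the function, not real output.

```python
import json


def stack_statuses(describe_stacks_json):
    """Map each stack name to its status from describe-stacks JSON output."""
    data = json.loads(describe_stacks_json)
    return {stack["StackName"]: stack["StackStatus"] for stack in data.get("Stacks", [])}


# Illustrative, trimmed response; a real one contains many more fields.
sample = '{"Stacks": [{"StackName": "test-stack", "StackStatus": "CREATE_COMPLETE"}]}'
print(stack_statuses(sample))
# prints: {'test-stack': 'CREATE_COMPLETE'}
```

A status of CREATE_COMPLETE indicates that all the resources in the template were created.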

The EC2 instance created will keep running and incurring costs, so we want to be able to delete it when we have finished using it. This can be done manually from the Delete and the Instance state tabs in the CloudFormation and EC2 consoles respectively, shown above. Alternatively, the following command deletes the stack that has been created, along with the resources in it:

    $ aws cloudformation delete-stack --stack-name test-stack

Cloud posts

Cloud 1: Introduction to launching a Virtual Machine in the Cloud

Cloud 2: Getting started with using a Virtual Machine in the Cloud

Cloud 3: Docker and Jupyter notebooks in the Cloud

Cloud 5: Introduction to deploying an app with simple CI/CD

Cloud 6: Introduction to Infrastructure as Code using CloudFormation

Cloud 7: Building an API with Lambda, Docker and CloudFormation

Cloud 8: Automating publishing an Android app to Google Play with GitHub Actions

References

Eduardo Rabelo (2023), AWS IAM Roles with AWS CloudFormation, AWS Fundamentals.

Andreas Wittig and Michael Wittig, Amazon Web Services in Action, Manning.