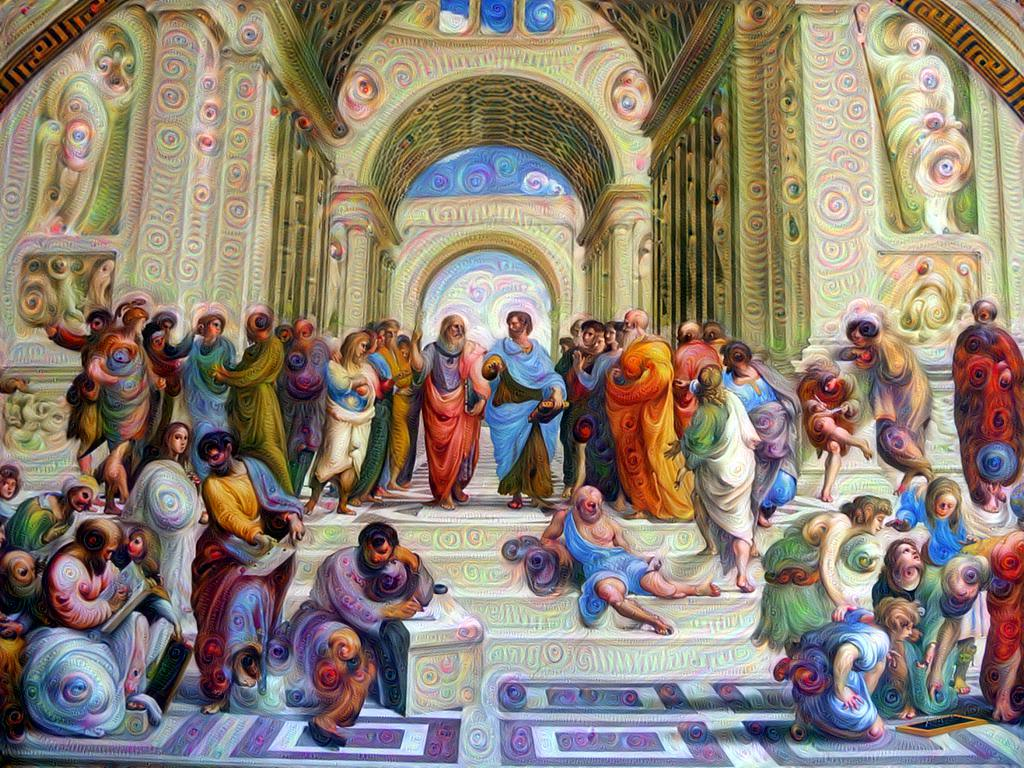

An algorithmic Deep Dream at the School of Athens, John Davies

From algorithmic creations to transforming galleries

Art is free. It doesn’t need to represent anything. It doesn’t need to be beautiful. It doesn’t need to be an object. It doesn’t need to be new. Despite these liberations, it is notable how comparatively little artists' tools have changed, particularly when compared with the digital revolution’s effect on many other creative domains, where entire supply chains have collapsed to a laptop, some servers and an internet connection. This relative detachment from digital technology is unlikely to last. Here are three interrelated ways digital could radically affect art in the decades to come.

1. Scaling art and the gallery: The whole notion of what a gallery is could change

It is easy to forget that public galleries are, in the greater scheme of things, a relatively new phenomenon. The first purpose-built public gallery in England, Dulwich Picture Gallery, was founded in 1811. From the 1850s onwards art began to be widely photographed, and extensive plaster copies of famous sculptures (such as those in the Cast Courts at the Victoria and Albert Museum) have been around in private collections since the 18th century. [1] Yet people still made long journeys to see the originals. Rather more recently, the Google Cultural Institute has been digitising cultural collections around the world in high resolution and making them available online. It seems plausible that such digital experiences, which are likely to become much more immersive and interactive, could become increasingly widespread and influential.

Digital access to art addresses several issues. Major galleries face the challenge of providing access to growing collections that already frequently exceed display space. They also face rising visitor numbers, which can lead to overcrowding and reduced visit quality. In this context, digital technology can potentially create a peaceful, on-demand, private experience of public art. This isn’t just about improving an existing experience, though; it’s also about using technology to create completely new kinds of art at scale. An example of this is the recent proposal by Joel Gethin Lewis to create an artwork the size of India which people could interact with by phone throughout the country using augmented reality. [2] These trends imply that the art spaces of the future will not just be the neo-classical pediments of museums or the white walls of the modern gallery, but also the cushioned rooms of virtual reality and the open spaces of augmented reality.

The cast courts at the V&A - a physical precursor to the gallery’s digital future?

2. About us: We will have a more active role in artistic experiences and they will become more personalised

The communication of the artist’s vision and our own personal response to it is central to art, but technology offers us the opportunity to be much more actively involved in this process. Personalisation is already an important part of certain kinds of performance art, for example Tino Sehgal’s This Progress, where the visitor is led through a gallery by a series of guides at successively older stages in life while discussing progress. [3] There is also a long tradition of using (chemical) technology to enhance personal artistic experiences: Aldous Huxley’s mescaline-driven insights into his art book collection in The Doors of Perception, the psychedelic movement in the 1960s and the role of drugs in aspects of dance culture, among others. [4] In the digital realm, interactivity is the basis of computer games, and online we experience a stream of content personalised to us through recommendation algorithms based on our data, algorithms that are often designed to encourage us to spend time or money on sites.

In an artistic context we can already see how technology is allowing greater scope for personalisation and new, heightened, artistic experiences. Small-scale computers such as the Raspberry Pi are making interactive physical installations more feasible, and Microsoft’s Kinect motion sensors have been repurposed in several art projects. [5] Recent examples of this kind of work are Chris Milk’s The Treachery of Sanctuary, where people can transform themselves into feathered beings, and the work of the art collective teamLab, which is opening a new digital art museum in Tokyo later in 2018 consisting of a suite of interactive installations. [6] [7] As sensor technology becomes more sophisticated, the data on us gets richer, and more work is digitally delivered at scale, one can envisage greater personalisation of artistic experiences beyond direct physical interactivity.

Gene Kogan’s cubist mirror at the Futurium Berlin, rendering the author in the style of Hokusai

3. The new creatives: Art will not just be made by human artists

Creative skills are hard to automate, and indeed there are likely to be more people doing creative work in future as a result, but it is plausible that we will see more artwork from non-human entities too. [8] [9] In fact, algorithmic generation of art has been happening for some time. What follows focuses on some specific examples, while recognising that all computer programmes are algorithms of one sort or another (indeed, if you consider computer games an art form, all three trends discussed in this article are well established).

In 1960 Brion Gysin and Ian Sommerville collaborated on computer-generated poetry, and a major exhibition of computer art, Cybernetic Serendipity, took place in 1968 at the Institute of Contemporary Arts in London. [10] In the 1980s the beautiful, infinite visuals of the Mandelbrot set, created by a simple algorithmic procedure, attracted a cult following. More recently, Google has developed the Deep Dream algorithm, where a programme that has been trained to recognise certain kinds of images or artistic styles can be used to create new imagery. In general terms, the programme takes an image and enhances aspects of the picture so that it resembles things the programme has been trained to recognise. For example, a version of Deep Dream can take as an input a picture in one artistic style and automatically manipulate it so it resembles another style it recognises. [11]

Another recent innovation is Generative Adversarial Networks (GANs), where two parts of the computer programme compete against each other. [12] One part tries to create a certain kind of target image, such as a human face; the other part inspects the image to judge whether it is a good representation of the target. The objective is for the generative part to fool the other part into accepting that it has produced a genuine version of the image. This has been found to be very effective at generating realistic images.
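The contest between the two parts can be sketched in a few lines of plain Python. This is a toy under stated assumptions, not how image GANs are built in practice: the "images" are single numbers drawn from a bell curve centred on 4, the generator only learns a shift `b`, and the discriminator is a one-line logistic model with parameters `w` and `c`. All names and settings here are illustrative choices of mine.

```python
import math
import random

REAL_MEAN = 4.0  # assumed target: "genuine" samples are drawn from N(4, 1)


def sigmoid(t):
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def discriminator(x, w, c):
    """Estimated probability that x is a genuine sample."""
    return sigmoid(w * x + c)


def train_step(b, w, c, rng, lr=0.05, batch=64):
    """One round of the contest: update the discriminator, then the generator."""
    real = [rng.gauss(REAL_MEAN, 1.0) for _ in range(batch)]
    fake = [b + rng.gauss(0.0, 1.0) for _ in range(batch)]  # generator output

    # Discriminator: gradient ascent on log D(real) + log(1 - D(fake)).
    grad_w = grad_c = 0.0
    for x in real:
        miss = 1.0 - discriminator(x, w, c)
        grad_w += miss * x
        grad_c += miss
    for x in fake:
        fooled = discriminator(x, w, c)
        grad_w -= fooled * x
        grad_c -= fooled
    w += lr * grad_w / batch
    c += lr * grad_c / batch

    # Generator: gradient ascent on log D(fake), i.e. try to fool the other part.
    grad_b = sum((1.0 - discriminator(x, w, c)) * w for x in fake) / batch
    b += lr * grad_b
    return b, w, c


def train(steps=500, seed=0):
    """Run the contest from a standing start and return the final parameters."""
    rng = random.Random(seed)
    b, w, c = 0.0, 0.0, 0.0
    for _ in range(steps):
        b, w, c = train_step(b, w, c, rng)
    return b, w, c
```

Calling `train()` runs the contest for a few hundred rounds and returns the final `b`, `w` and `c`. From a standing start, the first round pushes `w` above zero (the discriminator learns that larger numbers look more genuine) and the generator responds by nudging `b` upwards. Simple set-ups like this can circle around the target rather than settle on it, which is one reason GANs have a reputation for being fiddly to train.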

While it is practically and economically useful to be able to automate the transfer of styles and image creation, for example when creating visual effects, it must be acknowledged that not everyone would see this work as artistically interesting, although that’s something of a hazard in all forms of art. Nevertheless, clear progress on our understanding of image creation in computer vision and artificial intelligence has been made in recent years, and with far more resources going into this kind of research than ever before, it is likely that we will see a growing number of satisfying artistic creations from computers.

Unanswered questions

This leaves many questions unanswered. Aside from the fact this is all predicated on continuous technological progress on some quite hard problems, like VR sometimes making people sick, what about the economics and sociology of all this? The direct connection with the artist? And not tripping over power cables? Is it not striking that major tech companies are highly involved in all these trends, implying a new role for large corporates in art? Am I really talking about art, entertainment, or something we don’t have a word for yet? Aren’t heightened personalised experiences potentially also slightly dangerous? Where’s the soul in all this? While the three trends are plausible, and indeed are not new, it is not clear how this will all pan out. These are early days for digital technology in art. The computer, as Alan Turing conceived it, is only just over 80 years old. There is much more to come from the universal machine. Art may be free, but there is plenty of scope for its horizons to expand.

Acknowledgements

Thanks go to the anonymous questioner at a talk who asked me whether the creative industries will change as much in the next 50 years as they have changed in the past 50 years. The above contains fragments from my original answer that are, with the wisdom of hindsight, rather clearer than what I actually came out with. Thanks also go to Eliza Easton, Sam Mitchell and Georgia Ward-Dyer for their helpful comments.

This post does not do justice to the work of the artists working in this space. For more on this, see the catalogue for the 2014 Digital Revolution exhibition at the Barbican by McConnon, N., Bodman, C. and Admiss, D. (eds.); ‘A Touch of Code: Interactive Installations and Experiences’ by Klanten, R., Ehmann, S. and Hanschke, V. (eds.); ‘Digital Handmade: Craftsmanship and the New Industrial Revolution’ by Johnston, L. (2015); and Ars Electronica.

References

[1] Gossman, L. ‘The Important Influence of Paintings in Early Photography’, Musee d’Orsay, Art Works And Their Photographic Reproduction

[2] There have been large-scale artworks before, such as Christo and Jeanne-Claude’s umbrellas project in California and Japan, but digital technology greatly expands the scale at which work can potentially be done.

[3] https://www.artinamericamagazine.com/news-features/news/tino-sehgal-guggenheim-this-progress/

[4] Huxley, A. (1954), ‘The Doors of Perception’.

[5] https://www.raspberrypi.org/blog/tag/art-installation/

[6] https://borderless.teamlab.art/

[7] Engasser, F. (2016), ‘A new artistic approach to virtual reality’, Nesta. Sharma, S. (2017), ‘10 virtual reality artists you need to see to believe’.

[8] Bakhshi, H., Downing, J., Osborne, M. and Schneider, P. (2017), ‘The Future of Skills: Employment in 2030’, Pearson and Nesta.

[9] Ward-Dyer, G. (2017) ‘With AI emerging as a game-changer for the creative sector, the winner of the 2018 Turner Prize could be an AI and artist duo’, Nesta.

[10] ‘Brion Gysin Dream Machine’, New Museum Merrell. In a computer science context Christopher Strachey had earlier developed a programme that automatically generated love letters in 1953. https://www.newyorker.com/tech/elements/christopher-stracheys-nineteen-fifties-love-machine

[11] At a high level the programme is a network that, when shown an image, outputs the probability that it is a certain kind of object, such as a dog or a car. The network consists of different layers which recognise different aspects of an image, such as its overall structure and texture. Deep Dream turns this process on its head by manipulating the image to maximise the response of components of the network that recognise certain aspects of the image. Components of the image that look a bit like the things certain parts of the network are detecting are changed to look even more like those things. For a discussion of Deep Dream see ‘Inceptionism: Going Deeper into Neural Networks’ on the Google AI blog. For a TensorFlow implementation of Deep Dream that was used to create the Deep Dream in this post, see the GitHub repo by Alexander Mordvintsev.

[12] Goodfellow, I. (2016), ‘NIPS 2016 Tutorial: Generative Adversarial Networks’.
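
To make the mechanism in note [11] a little more concrete, here is a minimal sketch in plain Python. It is not Google's Deep Dream, nor the TensorFlow implementation used for the image in this post. The "network" is just two hand-written detectors (`stripes` and `blocks`, both hypothetical) looking at a four-pixel "image", and the step size and number of steps are arbitrary. What it does share with the description above is the central move: change the image, not the network, so that whatever the detectors already faintly see gets stronger.

```python
import math


def response(template, image):
    """Activation of one toy detector: a dot product with negatives clipped to 0."""
    return max(0.0, sum(t * p for t, p in zip(template, image)))


def dream(image, templates, steps=20, lr=0.1):
    """Nudge the image uphill on 0.5 * sum(response ** 2) over all detectors."""
    image = list(image)  # work on a copy so the caller's image is untouched
    for _ in range(steps):
        grad = [0.0] * len(image)
        for template in templates:
            r = response(template, image)
            for i, t in enumerate(template):
                grad[i] += r * t  # derivative of 0.5 * r ** 2 for pixel i
        norm = math.sqrt(sum(g * g for g in grad))
        if norm == 0.0:
            break  # no detector responds, so there is nothing to amplify
        # Normalised step, so each update changes the image by the same amount.
        image = [p + lr * g / norm for p, g in zip(image, grad)]
    return image


# Two hypothetical detectors over a four-pixel "image".
stripes = [1.0, -1.0, 1.0, -1.0]
blocks = [1.0, 1.0, -1.0, -1.0]

photo = [0.3, 0.1, 0.2, 0.0]  # looks a little like stripes, less like blocks
dreamed = dream(photo, [stripes, blocks])
```

Comparing `response(stripes, dreamed)` with `response(stripes, photo)` shows the faint stripes in the starting image becoming more pronounced, while an image that no detector responds to is returned unchanged. A real network has many layers of learned detectors in place of two fixed templates, but the loop is the same in spirit.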